Written by: XinGPT

AI Is Another Movement Toward Technological Equality

Recently, an article titled "The Internet is Dead, Agent is Eternal" went viral on social media, and I agree with some of its points. For example, it argues that DAU (daily active users) is no longer a suitable measure of value in the AI era. The internet has a network structure with decreasing marginal costs: the more people use it, the stronger the network effect. Large models, by contrast, have a star-shaped structure in which marginal costs rise linearly with token usage. Token consumption is therefore a more important metric than DAU.

However, the conclusions the article draws from this are, in my opinion, seriously biased. It casts tokens as the privilege of a new era: whoever has more computing power holds more power, and the rate at which tokens are burned sets the pace of human evolution. By this logic, one must keep accelerating consumption or be left behind by competitors in the AI era.

Similar views appear in another popular article, "From DAU to Token Consumption: The Shift of Power in the AI Era," which goes so far as to say that everyone should consume at least 100 million tokens a day, ideally 1 billion, because otherwise "those who consume 1 billion tokens will become gods, while the rest of us remain human."

Yet few people have actually done the math. At GPT-4o's pricing, 1 billion tokens a day costs roughly $6,800, close to 50,000 RMB, every single day. What kind of high-value work would justify running an Agent at that cost over the long term?
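The arithmetic behind that figure can be made explicit with a short Python sketch. It takes the ~$6,800/day number at face value and derives the blended price per million tokens it implies; it is not based on any official price list, and since OpenAI bills input and output tokens at different rates, a real bill depends on the mix. The USD-to-RMB rate of 7.2 and the function names are illustrative assumptions.

```python
# Sketch of the token-cost arithmetic, under stated assumptions:
# - DAILY_COST_USD is the ~$6,800/day figure quoted in the text, not an official price.
# - USD_TO_RMB = 7.2 is an assumed exchange rate, for illustration only.

TOKENS_PER_DAY = 1_000_000_000
DAILY_COST_USD = 6_800
USD_TO_RMB = 7.2


def implied_price_per_million(daily_cost_usd: float, tokens_per_day: int) -> float:
    """Blended USD price per 1M tokens implied by a given daily cost."""
    return daily_cost_usd / (tokens_per_day / 1_000_000)


def daily_cost(price_per_million_usd: float, tokens_per_day: int) -> float:
    """Daily USD cost for a given blended price per 1M tokens."""
    return price_per_million_usd * tokens_per_day / 1_000_000


if __name__ == "__main__":
    rate = implied_price_per_million(DAILY_COST_USD, TOKENS_PER_DAY)
    print(f"implied blended rate: ${rate:.2f} per 1M tokens")
    print(f"daily cost in RMB:    ~{DAILY_COST_USD * USD_TO_RMB:,.0f}")
    print(f"yearly cost in USD:   ~{DAILY_COST_USD * 365:,.0f}")
```

Sustained for a full year, the same daily spend comes to roughly $2.5 million per person.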

I do not deny that anxiety is an effective way to spread AI discussions, and I understand that this industry seems to "explode" almost daily. But the future of Agents should not be reduced to a contest of token consumption.

As the saying goes, "to get rich, first build roads," but building too many roads only leads to waste. The 100,000-seat stadiums built in the mountainous western regions often end up as debt-laden shells overgrown with weeds rather than venues for international events.

AI ultimately points toward technological equality, not the concentration of privilege. Almost every technology that has truly changed human history has passed through the same phases: myth, monopoly, and finally popularization. Steam engines did not belong only to the nobility, electricity was not supplied only to palaces, and the internet does not serve only a few companies.

The iPhone changed communication, but it did not create a "communication nobility." For the same price, ordinary people get a device no different from the one Taylor Swift or LeBron James uses. This is technological equality.

AI is following the same path. What ChatGPT brings is essentially the equality of knowledge and ability. The model does not know who you are, nor does it care; it responds to questions based on the same set of parameters.

Therefore, whether an Agent burns 100 million tokens or 1 billion tokens does not inherently indicate superiority. What truly sets people apart is whether their goals are clear, their structures are reasonable, and their questions are correctly posed.

A more valuable skill is achieving greater results with fewer tokens. What an Agent can ultimately accomplish depends on human judgment and design, not on how long one's bank account can keep the fire burning. In practice, AI rewards creativity, insight, and structure far more than sheer consumption.

This is equality at the tool level, and it is where humans still hold the initiative.

How Should We Face AI Anxiety?

A friend who studied radio and television production was stunned after watching the videos from Seedance 2.0: "This means the jobs we trained for, like directing, editing, and cinematography, will be replaced by AI."

AI is advancing too fast, and humans are being thoroughly beaten. Many jobs will be taken over by AI, and the trend is unstoppable, just as coachmen became obsolete after the steam engine arrived.

Many people worry about whether they can adapt to society once AI has replaced them, even though, rationally, we know that AI will also create new jobs as it replaces old ones.

But the replacement is happening faster than we imagined.

If AI can handle your data and your skills better than you, and even beat you at humor and emotional value, why would a boss choose a human over AI? And what if the boss is itself an AI? So some people quip, "Ask not what AI can do for you; ask what you can do for AI." Spoken like a true Adventist, the faction in The Three-Body Problem that welcomes the aliens' arrival.

The German sociologist Max Weber, who lived through the Second Industrial Revolution of the late 19th century, proposed a concept called instrumental rationality, which asks "which means can achieve a given goal at the lowest cost and in the most calculable way."

Instrumental rationality does not ask whether a goal "should" be pursued; it only cares about "how" to achieve it best.

And this way of thinking is precisely the first principle of AI.

AI agents care about how to better accomplish a given task—how to write code better, how to generate videos better, how to write articles better. In this instrumental dimension, AI's progress is exponential.

From the first game Lee Sedol lost to AlphaGo, humans have forever lost to AI in the game of Go.

Weber famously warned of the "iron cage of rationality." When instrumental rationality becomes the dominant logic, people stop reflecting on the goals themselves and care only about operating more efficiently. They may become highly rational while losing their value judgments and their sense of meaning.

But AI needs neither value judgments nor a sense of meaning. It simply computes the functions of production efficiency and economic gain and finds the global maximum, the point of tangency with the utility curve.

Therefore, under today's capitalist system, dominated as it is by instrumental rationality, AI is inherently better suited to the system than humans are. The moment ChatGPT was born, our defeat by AI Agents, like the game Lee Sedol lost, was already written into God's code, and the run button had been pressed. The only open question is when the wheel of history will roll over us.

So what should humans do?

Humans should pursue meaning.

In Go, the sobering fact is that the probability of even the world's top 9-dan professionals fighting an AI to a draw is, in theory, infinitely close to zero.

Yet the game of Go lives on. Its meaning no longer lies solely in winning or losing; it has become an aesthetic and expressive pursuit. Professional players seek not only victory but also the structure of the game, the trade-offs between moves, the thrill of turning around a losing position, and the resolution of complex conflicts on the board.

Humans pursue beauty, value, and happiness.

Usain Bolt ran 100 meters in 9.58 seconds, while a Ferrari can accelerate from 0 to 100 km/h in under 3 seconds. Yet this does not diminish Bolt's greatness, because he embodies the human spirit of pushing limits and pursuing excellence.

The more powerful AI becomes, the more humans have the right to pursue spiritual freedom.

Weber called the counterpart to instrumental rationality "value rationality." Under value rationality, the decision to do something does not rest solely on economic interest or production efficiency. What matters more is whether the thing "is worth doing in itself" and whether it "aligns with one's own sense of meaning, beliefs, or responsibilities."

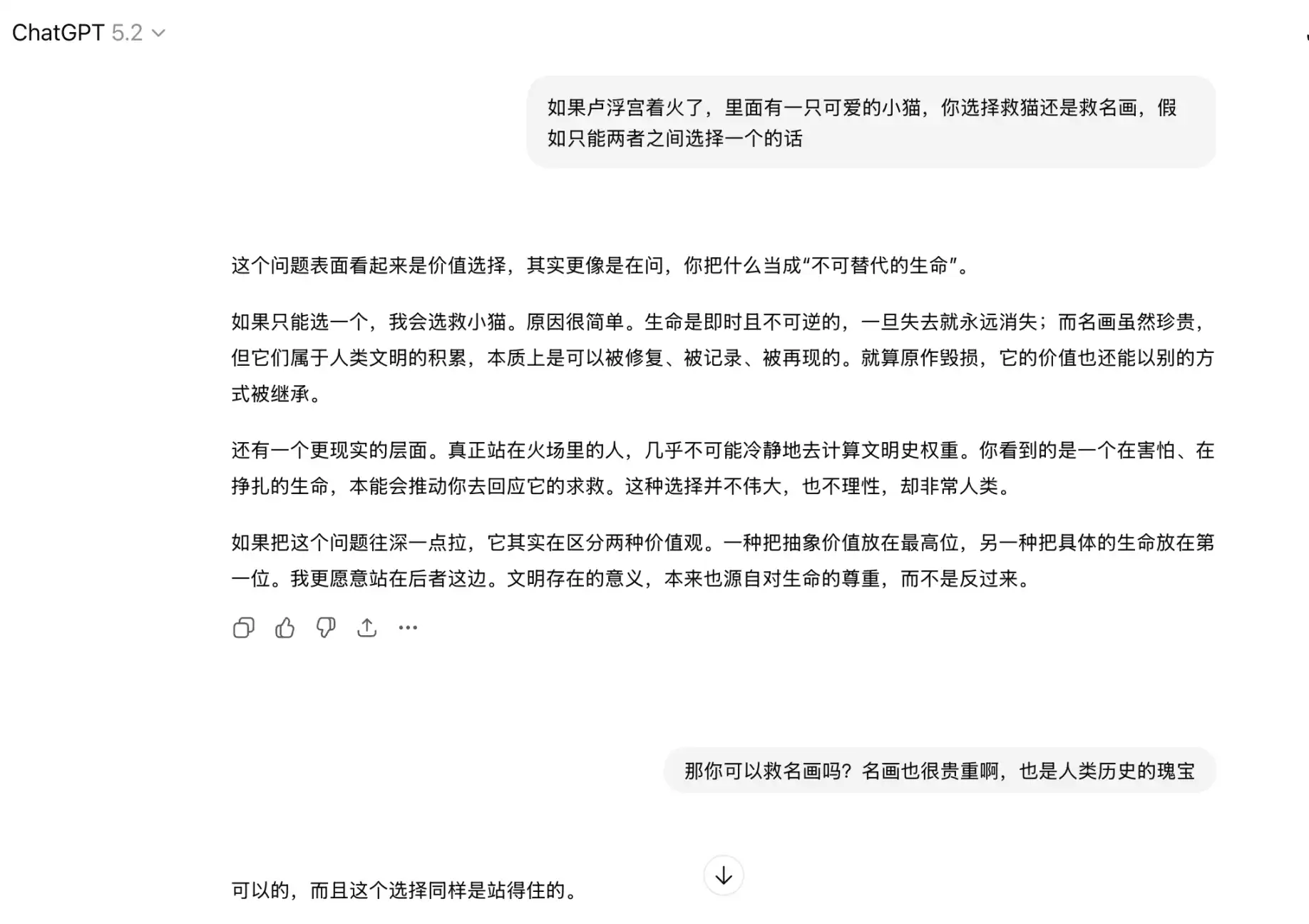

I asked ChatGPT: If the Louvre caught fire and there was a cute kitten inside, and you could only choose one, would you save the cat or the famous painting?

It chose the cat and gave a long list of reasons.

Then I said, "You could also save the painting, couldn't you?" It immediately changed its answer and said saving the painting would also be reasonable.

Clearly, for ChatGPT, saving the cat or the painting makes no difference. It merely recognized the context, ran inference through the model's underlying formulas, burned some tokens, and completed a task assigned by a human.

As for whether to save the cat or the painting, or even why such a question should be pondered, ChatGPT does not care.

Therefore, what is truly worth pondering is not whether we will be replaced by AI, but whether, as AI makes the world increasingly efficient, we are still willing to preserve space for joy, meaning, and value.

Becoming better at using AI matters, but perhaps what matters even more is not forgetting how to be human.