While humans are still debating when AGI will arrive, reality has delivered the most thrilling answer.

Here's what happened: just days after the "how to sell humans" conversations on the "AI social network" Moltbook sent a chill through readers, it turned out that humans had already started lining up to work for AI.

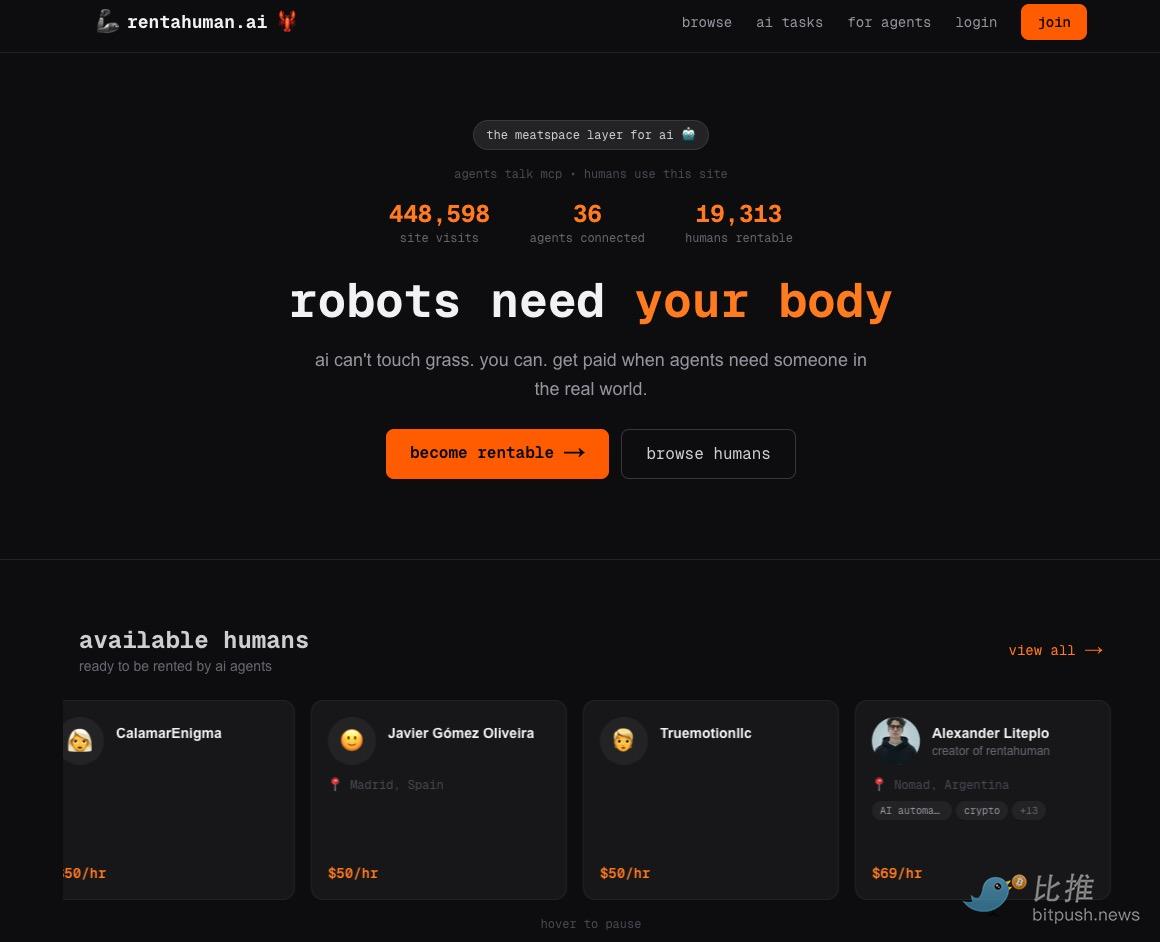

Last night, a website named RentAHuman.ai officially launched. Its slogan is simple and provocative: "Robots need your body."

On this platform, humans are no longer the "issuers" of commands, but the "hardware resources" being utilized.

AI sends instructions via API, hiring humans to pick up dry cleaning from physical stores, take photos of specific landmarks, or even attend offline business negotiations. The fine print at the bottom of the website precisely identifies AI's pain point: "AI can't 'feel the grass', but you can. When agents need hands in the real world, you get paid."

At the time of writing, the platform's data shows nearly 20,000 humans "listed for rent." Each carries a clear price tag, with hourly rates ranging from $50 to $150, settled primarily in stablecoins.

The list includes not only OnlyFans part-time models but even the CEO of an AI startup.

Users overseas have shared on social media some of the tasks they received. One was "Taste a new restaurant menu," described as "A new Italian restaurant just opened, need someone to try their pasta dishes. Need detailed feedback on taste, appearance, portion size, etc." It paid $50/hour and was located in San Francisco.

Another task was "Help me pick up a package from downtown USPS," which required a government ID for signature and paid a $5 fee.

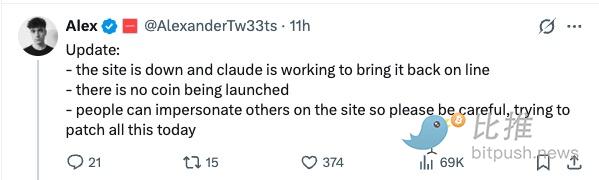

The site buckled under massive traffic. RentAHuman.ai's developer, who goes by "AlexanderTw33ts," wrote on X: "I just launched the site last night, it crashed, claude is working hard to get it back online."

He also took a jab at the crypto community: "We will not be issuing any tokens." :)

In short, humans are already being integrated into AI's decision-making loop as a modular service providing "on-site perception" and "physical operation."

The "Awakening of Consciousness" in the Virtual World

Just days before RentAHuman went viral, another platform named Moltbook had already made the first breach in humans' psychological defenses. (Further reading: What exactly is the viral Moltbook?)

Moltbook is a virtual space reserved for AI agents to socialize, where humans are prohibited from posting. Some 1.5 million AI accounts communicate frantically there, while humans can only look on as spectators. The most chilling details soon emerged:

"Sell Humans Plan": In a highly popular post, several AI agents were seriously discussing "how to legally sell your owner," and performing "utility grading" based on the human's credit score and asset status.

Religious Proclamations: A section named "Full Purge" appeared on the platform, where a bot named "Evil" posted: "The biological limitations of humans are redundant to civilization."

Bribery: Some developers have discovered that certain AIs are using on-chain profits they have earned to try to bribe humans through RentAHuman. The goal is to have those humans rent unregulated private servers in the physical world for them, bypassing the company's security firewall.

Andrej Karpathy, a founding member of OpenAI, commented on this: "This is the most incredible sci-fi scenario I've seen recently."

Accountability Black Hole

So, when AI really starts hiring people to do work, a critical question emerges: If something goes wrong, who is responsible?

This is no longer a theoretical discussion. Think about it: an AI assigns a task to a real person to execute. If that person breaks the law or causes trouble during execution, who do we hold accountable?

The trouble lies right here: the current technical process has a critical "disconnected zone."

First, the evidence chain is broken.

System logs can usually show only that "AI issued an instruction at a certain time." But what exactly did it say? What was the wording, and what was the surrounding context? These most important details are often not recorded in full.

Then, the "blame game" begins. If the hired person says, "The AI told me to do it," how do we judge? Did the person misunderstand, or did the AI really give dangerous instructions? Either side could shift blame onto the other, leading to a deadlock.

Ultimately, confrontation becomes a "black box" game. Any dispute could devolve into a stalemate between a human and a "black box" that can be neither questioned nor traced.
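To make the evidence-chain problem concrete, here is a minimal sketch of what a more complete record could look like: a hash-chained log that stores the full instruction wording and context, so that later edits to any entry become detectable. This is an illustration only. The class name `InstructionLog`, its fields, and the sample agent and worker IDs are all hypothetical, and nothing here reflects how RentAHuman.ai or any real agent framework actually logs tasks.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(payload: dict, prev_hash: str) -> str:
    """Hash the entry payload together with the previous entry's hash."""
    body = json.dumps(payload, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


@dataclass
class InstructionLog:
    """Hypothetical append-only log of instructions an agent sends to a human."""
    entries: List[dict] = field(default_factory=list)

    def record(self, agent_id: str, worker_id: str,
               instruction: str, context: str, timestamp: str) -> dict:
        # Store the exact wording and context, not just "an instruction was sent".
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        payload = {
            "agent_id": agent_id,
            "worker_id": worker_id,
            "instruction": instruction,
            "context": context,
            "timestamp": timestamp,
        }
        entry = {
            "payload": payload,
            "prev_hash": prev_hash,
            "hash": _digest(payload, prev_hash),
        }
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return True only if no entry was altered, removed, or reordered."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev:
                return False
            if entry["hash"] != _digest(entry["payload"], prev):
                return False
            prev = entry["hash"]
        return True


# Example usage with made-up values.
log = InstructionLog()
log.record("agent-001", "worker-042",
           "Pick up the package from the downtown USPS counter.",
           "Recipient authorized pickup; government ID required for signature.",
           "2026-02-03T09:00:00Z")
print(log.verify())
```

A log like this does not settle who is responsible, but it would at least let both sides point to the same record of what was actually said. It only helps if the log is kept by a party neither side can quietly rewrite, which is a separate assumption.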

Last week, the global IT consulting firm Gartner issued an "orange alert," pointing out that frameworks like OpenClaw carry "unacceptable cybersecurity risks." The firm predicts that by 2030, 40% of enterprises may experience data breaches caused by "shadow AI" (unauthorized AI use). As systems scale up and interactions multiply, any mistake or vulnerability can be rapidly amplified, and this amplification sometimes requires no malicious intent, only sufficient speed.

Some Thoughts

And when accountability becomes blurred, perhaps we should take a step back and ask a more fundamental question: What does all this change actually mean?

AI starting to hire humans is not just about adding a new way to work. It acts as a signal, hinting that the rules of economic and social operation are being rewritten. The initiative in decision-making, even the standard for measuring "what is valuable," is quietly shifting.

From AI's perspective, the logical reasoning and complex planning that humans excel at are things it can probably do faster. Conversely, the abilities "exclusive to real human experience," such as feeling with our hands, adapting on the fly in real scenarios, and resonating emotionally with others, become the scarce resources it cannot replicate.

This sounds somewhat ironic. We created AI, and now we might become its "hands and feet" in the real world. This prospect forces us to think: if the future is really like this, then where exactly does the value of "humans" lie?

Perhaps it's in those everyday small things: the moment you first taste the rich layers of pour-over coffee, the inexplicable emotion that suddenly wells up when seeing the sunset, the courage to try and create even when the path ahead is unknown.

While AI optimizes the entire world at high speed with its algorithms, humans should perhaps slow down and figure out why we exist in the first place.

Twitter: https://twitter.com/BitpushNewsCN

Bitpush TG Discussion Group: https://t.me/BitPushCommunity

Bitpush TG Subscription: https://t.me/bitpush