World's Richest Man, 'Silicon Valley Iron Man' Musk, to Take SpaceX Public in 2026.

The overall valuation is approximately $1.5 trillion (about RMB 10.6 trillion), and the planned financing scale will "significantly exceed $30 billion". If achieved, the offering will surpass Saudi Aramco's 2019 IPO ($29.4 billion) to become the world's largest IPO.

The grand goal of a $1.5 trillion valuation cannot rely solely on the old dream of Starlink and Starship's "stars and seas"; Musk has opportunistically crafted a new dream: Space Computing Power.

At the Baron Capital Annual Investor Conference on November 18, Musk publicly elaborated on the concept of "Space AI Computing Power" for the first time. "Within five years, running AI training and inference in space will become the lowest-cost solution."

Musk clearly stated that Earth's orbit has the never-setting sun providing free electricity; the cosmic vacuum is the ultimate heat sink; the reusability of Starship will significantly reduce the cost of transporting materials to Earth's orbital space.

However, exploiting space's environment and energy requires further technological advances, including radiation protection and heat dissipation, and those advances raise their own questions of economic feasibility, the very problem space-based computing was supposed to solve.

A senior aerospace researcher quipped: "Exposing user data to the battering of a violent solar wind? What he needs isn't a computer, it's a brain supplement."

01

The Core of the New Narrative: Space Computing Power

Musk's proposed "space data center" is not science fiction but directly targets the two major bottlenecks of current Earth-based AI infrastructure: energy costs and cooling costs.

The environmental characteristics offered by space make it a leapfrog alternative, primarily including the never-setting sun and the cosmic vacuum. The former provides almost free energy, while the latter is a natural "ultimate heat sink".

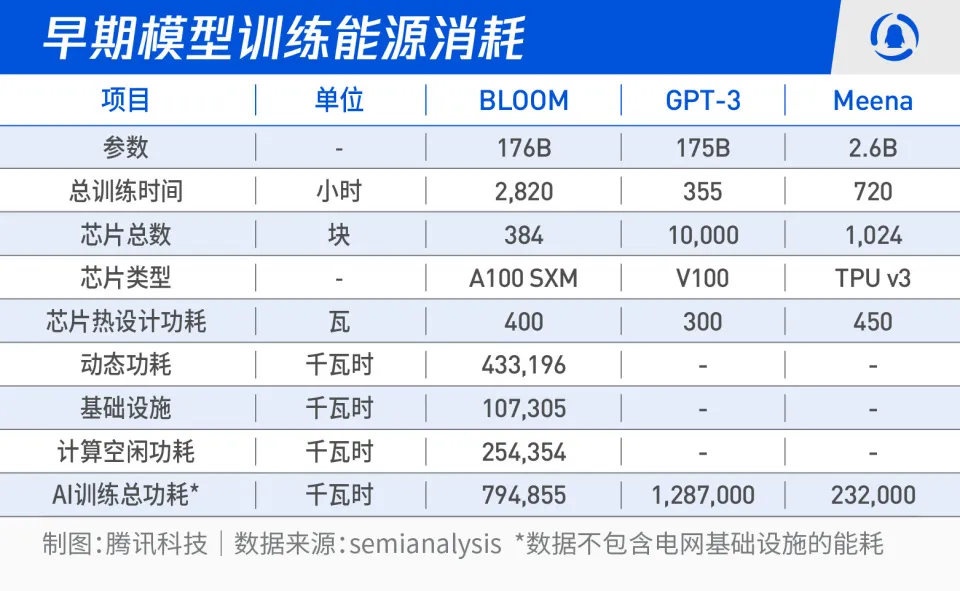

As AI models expand dramatically, energy has become the biggest pressure point in the computing economy. Training a model like GPT-5 could cost hundreds of millions of dollars, with electricity costs constituting a significant portion.

First, solar power is continuous: low-orbit satellites circle the Earth about 16 times a day, and through orbital distribution and inter-satellite link scheduling, tasks can be switched in real time to nodes in the sunlit zone, achieving a nearly 24-hour energy supply.
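As a rough check on the "about 16 times a day" figure, the sketch below applies Kepler's third law to a circular orbit. The spherical-Earth radius and the two sample altitudes (280 km and 550 km) are illustrative assumptions and do not come from the article.

```python
import math

MU_EARTH = 3.986004418e14  # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6.371e6          # mean Earth radius, m (spherical-Earth assumption)
SECONDS_PER_DAY = 86_400


def orbital_period_s(altitude_m: float) -> float:
    """Period of a circular orbit at the given altitude (Kepler's third law)."""
    semi_major_axis = R_EARTH + altitude_m
    return 2 * math.pi * math.sqrt(semi_major_axis**3 / MU_EARTH)


def orbits_per_day(altitude_m: float) -> float:
    """Number of complete revolutions in 24 hours."""
    return SECONDS_PER_DAY / orbital_period_s(altitude_m)


if __name__ == "__main__":
    for altitude_km in (280, 550):  # hypothetical sample altitudes
        n = orbits_per_day(altitude_km * 1_000)
        print(f"{altitude_km} km: {n:.1f} orbits per day")
```

Under these assumptions, very low orbits come out close to 16 revolutions a day, while orbits a few hundred kilometers higher come out closer to 15, so the article's figure is best read as an approximation.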

Second, no land, power grid, or substation facilities are needed. Once deployed, the long-term cost of space-based solar power approaches zero.

Energy and infrastructure become cheap in space, but the heat dissipation problem must be solved. High-performance GPUs have extremely high thermal density, causing 30%–40% of a ground data center's electricity to be consumed by cooling.

In the vacuum of space there is no convection or conduction to the surroundings, so heat can be shed only by thermal radiation. For satellites on the sun-facing side, this is equivalent to "barbecue mode". In addition, space radiation and high-energy particles can disrupt high-performance computing processes, leaving them "fragmented".
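To give a sense of scale for radiation-only cooling, the sketch below uses the Stefan-Boltzmann law to estimate how much radiator surface a given heat load would need. The radiator temperature (300 K), the emissivity (0.9), and the choice to ignore absorbed sunlight and Earth's infrared are all simplifying assumptions for illustration, not figures from the article or from any real design.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def radiated_flux_w_per_m2(temp_k: float, emissivity: float = 0.9) -> float:
    """Power radiated per square meter of surface at temperature temp_k."""
    return emissivity * STEFAN_BOLTZMANN * temp_k**4


def radiator_area_m2(heat_w: float, temp_k: float = 300.0,
                     emissivity: float = 0.9) -> float:
    """Radiating area needed to reject heat_w watts.

    Idealized: the radiator sees only cold space and absorbs nothing
    (no sunlight, no Earth infrared), so real hardware would need more area.
    """
    return heat_w / radiated_flux_w_per_m2(temp_k, emissivity)


if __name__ == "__main__":
    for heat_mw in (1, 200):  # hypothetical heat loads in megawatts
        area = radiator_area_m2(heat_mw * 1e6)
        print(f"{heat_mw} MW -> about {area:,.0f} m^2 of radiating surface")
```

Because the radiated power scales with the fourth power of temperature, running the radiator hotter shrinks the required area sharply, which is one reason chip operating temperature matters so much in this trade-off.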

"The computing chips you use on the ground likely won't work up there," emphasized the aforementioned researcher.

Another key element supporting SpaceX's space computing power story is the cost of Starship's launch capacity.

The economics of space computing power are built upon Starship's extremely low launch costs. Musk's planned Starship V3 (single payload ~100 tons) could, with high-frequency reuse, push the launch price down to $200–300 per kilogram, far lower than existing rockets.

Based on this estimate, the transportation cost to send a 200MW-class data center into orbit would be about $5–7.5 billion, still significantly lower than the total investment required to build a similar-scale AI supercomputer on Earth ($15–25 billion).
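The arithmetic behind this estimate can be reconstructed with a short sketch. It works backward from the article's own numbers ($200–300 per kilogram, $5–7.5 billion in total, roughly 100 tons per Starship V3 flight) to the orbital mass and launch count they imply. The function names are hypothetical, and a "ton" is taken as 1,000 kg.

```python
import math


def implied_mass_kg(total_cost_usd: float, price_per_kg_usd: float) -> float:
    """Mass that a given transport budget buys at a given price per kilogram."""
    return total_cost_usd / price_per_kg_usd


def launches_needed(mass_kg: float, payload_kg: float) -> int:
    """Whole launches required to lift mass_kg at payload_kg per flight."""
    return math.ceil(mass_kg / payload_kg)


if __name__ == "__main__":
    payload_kg = 100_000  # ~100 tons per flight, per the article
    for cost_usd, price_usd in ((5_000_000_000, 200), (7_500_000_000, 300)):
        mass = implied_mass_kg(cost_usd, price_usd)
        flights = launches_needed(mass, payload_kg)
        print(f"${cost_usd / 1e9:.1f}B at ${price_usd}/kg -> "
              f"{mass / 1_000:,.0f} tons, {flights} launches")
```

Both ends of the range point to the same implied mass, about 25,000 tons in orbit, or roughly 250 fully loaded flights. The comparison covers transport only; it leaves out the cost of the hardware being launched.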

The story is always beautiful, but reality presents numerous challenges.

Beyond heat dissipation and cosmic rays, the first issue proponents of the "space computing power" narrative must confront is rocket explosions and accidents. The cost of such low-probability events would far exceed the value gained from increased launch capacity, especially considering that 1GW of computing power on Earth is worth up to $50 billion.

From this perspective, everything returns to the original point: the "economic problem".

Regarding heat dissipation, it might be possible to exploit the low temperatures of deep space, but that raises another issue: the distances involved are too great for solar power, so a more efficient energy source, such as nuclear power, would be needed to replace it.

As for radiation, although it can be mitigated through radiation hardening, this adds extra technical challenges and costs beyond standard GPUs.

"With radiation hardening, the materials and processes themselves are expensive, and there's an upper limit to reinforcement; it's never as effective as the atmosphere's radiation protection," said the aforementioned researcher.

To address this, SpaceX is leveraging Tesla's experience with automotive-grade AI chips: Triple Modular Redundancy (TMR) architecture and real-time verification significantly improve radiation resistance.
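For readers unfamiliar with Triple Modular Redundancy, the sketch below shows the textbook idea: run the same computation on three replicas and take a bitwise majority vote, so a single corrupted result is outvoted. This is a generic illustration with hypothetical function names and a simulated bit flip; it does not describe SpaceX's or Tesla's actual implementation.

```python
def majority_vote(a: int, b: int, c: int) -> int:
    """Bitwise 2-of-3 vote: each output bit matches at least two inputs."""
    return (a & b) | (a & c) | (b & c)


def replicas_disagree(a: int, b: int, c: int) -> bool:
    """True if any replica differs, i.e. a fault was detected and masked."""
    return not (a == b == c)


def flip_bit(value: int, bit: int) -> int:
    """Simulate a radiation-induced single-event upset on one bit."""
    return value ^ (1 << bit)


if __name__ == "__main__":
    correct = 0b1011_0010
    # Three replicas of the same result; the second suffers a bit flip.
    results = (correct, flip_bit(correct, 5), correct)
    voted = majority_vote(*results)
    print(f"fault detected: {replicas_disagree(*results)}")
    print(f"voted result matches correct value: {voted == correct}")
```

The vote masks any fault confined to one replica, but it cannot help if two replicas are corrupted in the same bit, and it triples the hardware and power spent on each computation, which feeds directly back into the cost question.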

02

Silicon Valley's Capital Backers "Get On Board"

SpaceX's new space computing power narrative is receiving a strong response from Wall Street and Silicon Valley capital.

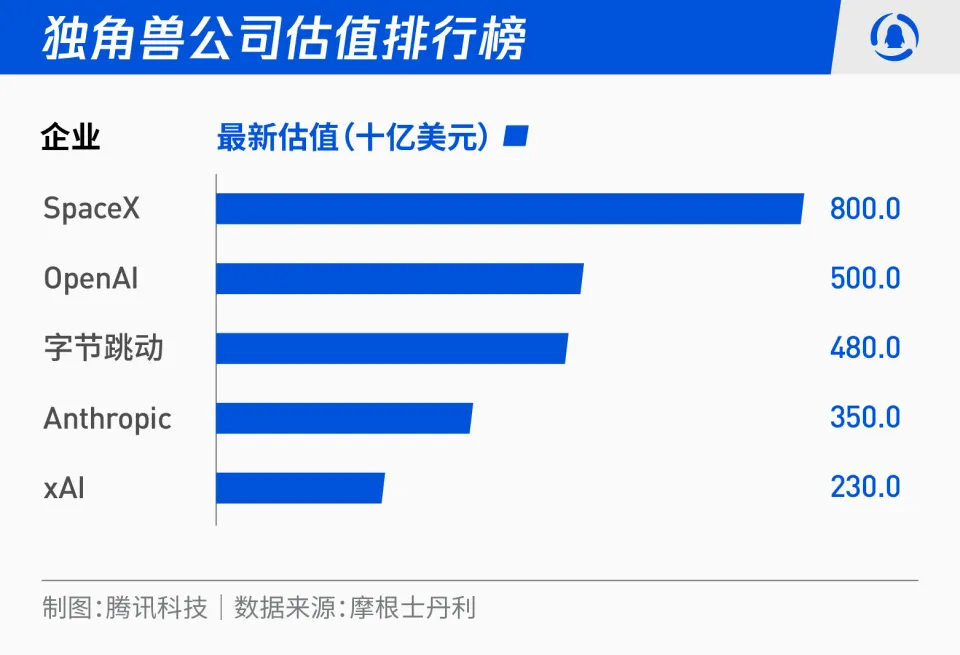

Investment bank Morgan Stanley emphasized in a recent research report that the market's repricing of SpaceX's valuation essentially stems from the renewed expansion of its commercial boundaries. The "orbital data center" has become a new AI infrastructure narrative driving its valuation surge.

Wall Street has responded positively to Musk's new story for SpaceX. The most representative example is Cathie Wood's Ark Investment.

Ark's latest model explicitly values SpaceX entirely as a high-growth software company and AI infrastructure company, rather than a traditional aerospace or telecommunications enterprise. Almost all the incremental valuation in their model comes from the "Space AI Computing Power" business line.

As long-time investors in Musk, they made aggressive scenario assumptions: by 2030, Starlink terminal users reach 120 million, contributing $300 billion in annual revenue, while orbital data centers bring in an additional $80–120 billion in revenue with a net profit margin exceeding 70%, far higher than ground-based cloud services.

Ark Investment believes the key point is reducing Starship launch costs to below $100 per kilogram. After that, the scaled deployment of space computing power will experience exponential growth, similar to Amazon Web Services (AWS) after its launch, pushing SpaceX to become a $2.5 trillion-level company.

On the other hand, Silicon Valley mogul Peter Thiel's influence on SpaceX is more strategically significant.

As one of the earliest and most crucial external investors, Thiel provided a lifeline in 2008 when SpaceX faced three consecutive launch failures and was on the verge of bankruptcy, investing $20 million through Founders Fund as a timely rescue. He has since made multiple additional investments in SpaceX.

Thiel's support for SpaceX goes far beyond money itself; it also comes with the authoritative endorsement of the Silicon Valley ideology. Peter Thiel's involvement lends credibility to the "space computing power" narrative. More importantly, Thiel leverages his "Silicon Valley-Washington" influence to secure key policy and regulatory space for SpaceX.

The backing of these capital heavyweights effectively stamps a "credible" label on SpaceX's $1.5 trillion valuation. Accordingly, Morgan Stanley noted in the aforementioned report that when Musk denied the rumored "$800 billion valuation," he was mostly denying the fundraising itself, not the valuation.

Progress on Starship and Starlink, acquisition of global direct-to-cellular spectrum, the orbital data center becoming a new narrative, and SpaceX's overwhelming capability of capturing 90% of global launch mass have convinced capital markets that these variables will become the "main artery of future AI infrastructure".

03

Competition, Bubbles, and Risks

Over the past few years, Musk hasn't been the only one pushing the development of space computing power.

As early as 2021, the Barcelona Supercomputing Center (BSC) in Europe and Airbus Defence and Space jointly launched the GPU4S (GPU for Space) project, funded by the European Space Agency (ESA), to verify the feasibility of embedded GPUs in aerospace applications.

The project produced GPU4S Bench, an open-source benchmark suite for evaluating performance in image processing, autonomous navigation, and similar workloads, and led to OBPMark, the open-source benchmark suite adopted by ESA, laying the foundation for Europe's technological autonomy in orbital computing.

On November 2, 2025, a SpaceX Falcon 9 successfully launched the first experimental satellite, Starcloud-1, for startup Starcloud into low Earth orbit. The satellite carried an NVIDIA H100 GPU, marking the first verification of in-orbit AI data processing capability. It is reported that NVIDIA and Starcloud jointly developed a combined vacuum heat dissipation architecture, using highly thermally conductive materials on the satellite's exterior to conduct heat to the surface for dissipation via infrared radiation.

As Musk's old rival in the commercial space race, Jeff Bezos is also pushing Blue Origin to develop orbital AI data center technology, planning to use space-based solar power to fuel large-scale AI computing power.

He predicts that within the next 20 years, the cost of orbital data centers could be lower than ground facilities.

Another opponent in the AI field, OpenAI CEO Sam Altman, is also eager, considering acquiring rocket company Stoke Space to send AI computing payloads into space.

It can be said that the path for SpaceX to reach its $1.5 trillion valuation via "space computing power" is full of challengers, but the most direct opponent at this stage is Amazon's Project Kuiper.

Public information shows that Kuiper plans to deploy 3,200 satellites between 2026 and 2029 through launch agreements with Blue Origin. Its biggest advantage lies in AWS's global cloud ecosystem, which can offer enterprises a "ground + space" hybrid computing power offering.

But in Thiel's view, Kuiper is still an "extension of the traditional cloud," built on an unchanged Earth-infrastructure mindset. SpaceX's space AI, by contrast, is a completely new paradigm: moving the computing center itself into orbit and making Earth data centers a supplementary layer. This paradigm difference determines the ceiling for both sides in the future "orbital cloud" competition.

Beyond technical and competitive challenges, regulatory issues must be considered, including orbital debris management, international spectrum coordination, and space militarization controversies. Any of these could affect SpaceX's pace of progress over the next two to three years.

Returning to SpaceX: from the "Starlink IPO" in 2019, to "Starship is the core asset" in 2022, to the current "space data centers will become the cheapest AI computing power," Musk has rewritten SpaceX's narrative three times in the past six years, and almost every time the story has gone from skepticism to a working prototype.

Now, he is tying together Tesla's chip capabilities, xAI models, Starlink bandwidth, and Starship launch capacity into a unified strategy, targeting the most expensive resource of the AI era—low-cost computing power.

Wall Street has already started betting—the question is, can the richest man's new version of the "space dream" once again take shape?